In partnership with Microsoft Tech for Social Impact, Submittable developed three AI-powered tools that let our users eliminate some of the busywork of grantmaking: Form Auto Fill, AI Assistant, & Smart Import.

I led evaluative research and usability testing that identified critical usability issues in our beta AI features and set long-term design standards for Submittable’s approach to AI.

OVERVIEW

Form Auto Fill

Applicants can save their commonly used application answers. When completing later grant applications, they can autofill responses or copy and paste them from a library of saved answers.

AI Assistant

Grant makers can create AI-generated application forms in English, Spanish, German, and French for immediate use or as a starting point for more complex forms.

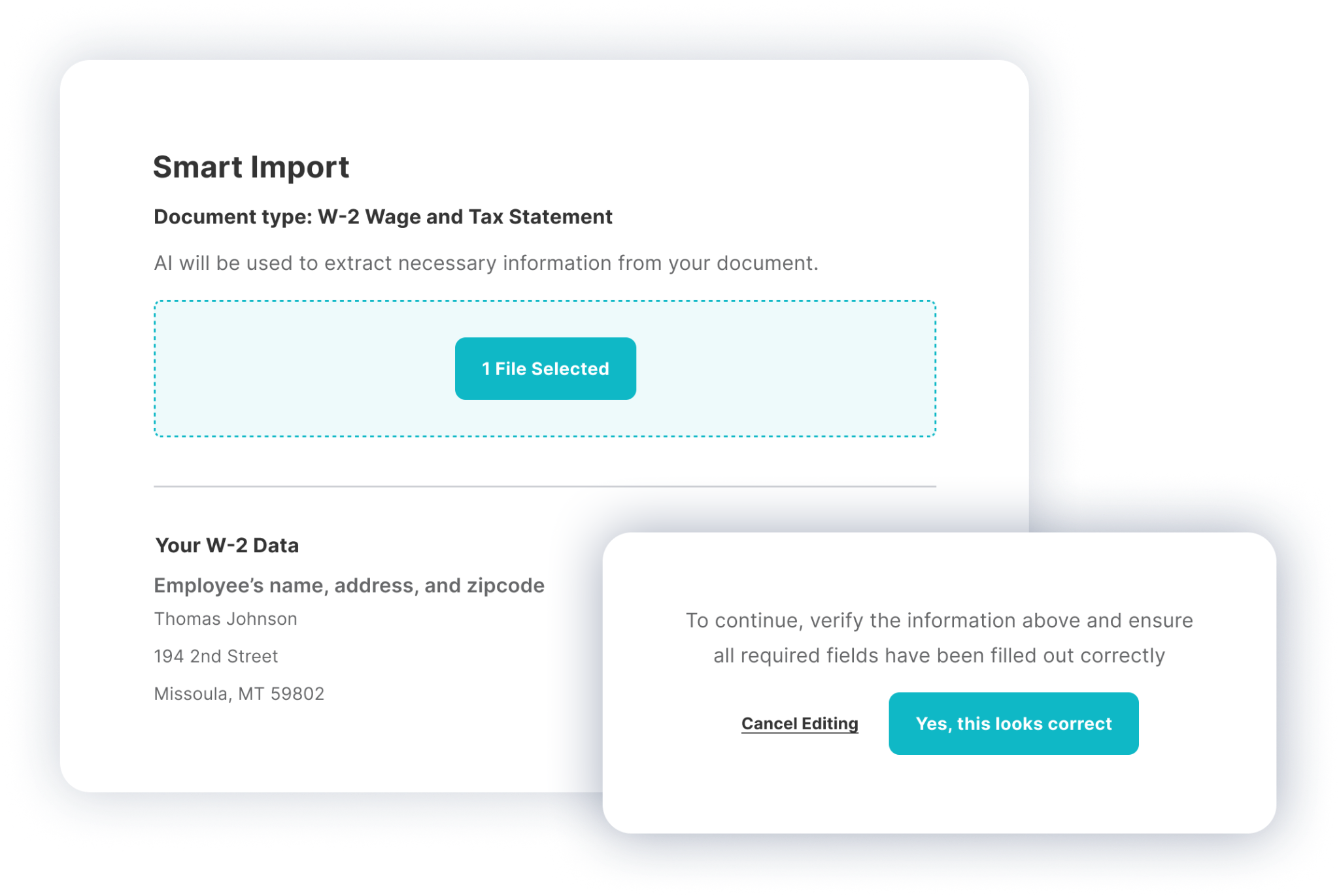

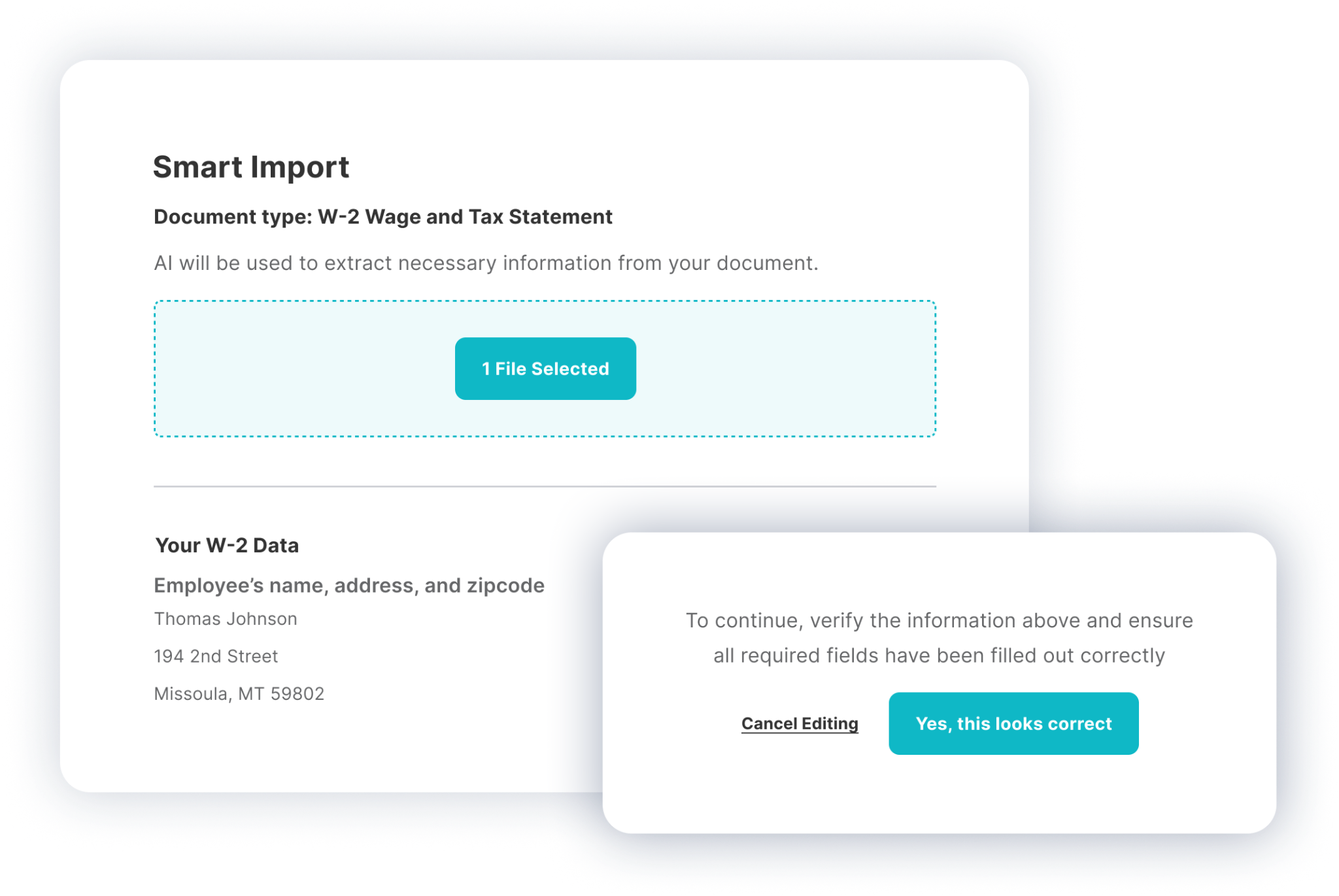

Smart Import

Applicants can upload W-2 or 1099 forms, which are automatically parsed into form fields that grantors can view and use for automation and reporting.

CONTEXT

As a Microsoft Tech for Social Impact partner, Submittable seized the opportunity to amplify our impact and connect with a network of roughly 400,000 nonprofits. As part of this partnership, we launched our own 'AI for Good' initiative and built three AI-enabled tools that empower mission-driven organizations doing social impact work.

This initiative also came with tight timelines and limited engineering capacity, so we had few opportunities to test with users and lacked real feedback on our design direction. Ahead of the initiative’s official launch, I led evaluative research on these three in-development features to test our riskiest assumptions.

MY WORK

I led concept and usability tests with 4 grant makers and 4 grant seekers that exposed critical usability and accessibility flaws.

⌛ Designed a ‘within-subjects’ study to maximize learnings per participant under tight timeline constraints.

📏 Created benchmarks for ease of use and perceived value, each rated on a 5-point Likert scale, to measure improvement on both metrics over time.

✔️ Defined 5 foundational tasks with success criteria to reliably measure task completion and failure rates.

IMPACT

This research de-risked our AI launch by identifying where our designs missed the mark and establishing usability benchmarks for long-term performance monitoring.

⚙️ Tagged research recommendations by urgency so launch milestones could be met without compromising the user experience.

🔊 Included participants with disabilities in the recruit, uncovering critical accessibility issues with JAWS screen reader compatibility.

🧪 Initiated a longitudinal beta program to assess real-world usability and the long-term value of these features.